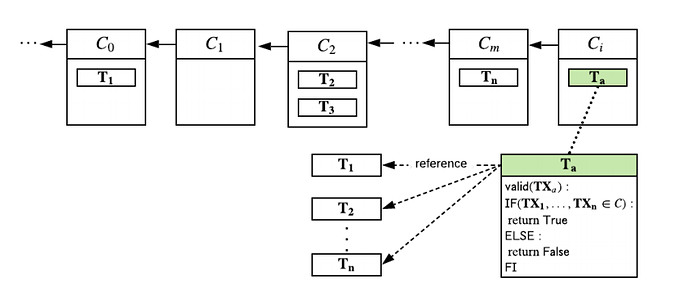

With `header_deps`, we can use CKB to capture previous block headers and accumulate them into a "Difficulty MMR" as described in the FlyClient white paper, forming the basis of a super-light client that can be implemented today without consensus changes. More information about this idea can be found here.

The light client can request the latest MMR commitment transaction and, with adequate SPV-style block confirmation, be certain of that commitment transaction's validity.
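
A minimal sketch of the SPV-style confirmation step, assuming a hypothetical simplified `Header` type (not CKB's real header layout) and using sha256 purely as a stand-in for CKB's header hash. It only checks that each header links to its parent and that enough confirmations sit on top of the commitment block; proof-of-work validation is deliberately left out.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass
class Header:
    # Hypothetical, simplified header; not CKB's real header structure.
    number: int
    parent_hash: bytes
    payload: bytes  # stands in for all remaining header fields

    def digest(self) -> bytes:
        # sha256 is a stand-in for CKB's actual header hash function.
        return hashlib.sha256(
            self.number.to_bytes(8, "little") + self.parent_hash + self.payload
        ).digest()


def verify_header_chain(anchor: Header, headers: list[Header], min_confirmations: int) -> bool:
    """Check that `headers` extend `anchor` one block at a time and that at
    least `min_confirmations` headers sit on top of it (PoW is not checked)."""
    prev = anchor
    for h in headers:
        if h.number != prev.number + 1 or h.parent_hash != prev.digest():
            return False
        prev = h
    return len(headers) >= min_confirmations
```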

The MMR root produced by this transaction lets the light client validate any prior block's inclusion in the chain via a Merkle path. (`header_deps` lag the chain tip by 4 epochs; SPV-style verification can be used for any blocks in this window.)
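
The Merkle path check can be sketched as follows. This is a plain binary-tree version under stated assumptions: sha256 stands in for the real hash, and each path step is a hypothetical `(sibling_hash, sibling_is_left)` pair. In an MMR the path would end at a peak, which is then combined with the other peaks to reach the root.

```python
from __future__ import annotations

import hashlib


def h(data: bytes) -> bytes:
    # sha256 as a stand-in for whatever hash the on-chain MMR uses.
    return hashlib.sha256(data).digest()


def verify_merkle_path(leaf: bytes, path: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Hash `leaf`, then combine it with each sibling on the path, bottom to top.
    Each step is (sibling_hash, sibling_is_left)."""
    node = h(leaf)
    for sibling, sibling_is_left in path:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root
```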

To validate the integrity of this value, the light client performs FlyClient-style validation: it requests randomly sampled blocks (with randomness derived from the latest block header) along with proofs of their inclusion in the MMR root.

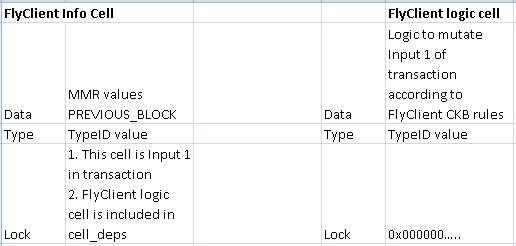

FlyClient CKB info cell

The implementation of FlyClient on CKB begins with an "anyone can unlock" cell that allows any user to add to the MMR. More information about MMRs, or "Merkle Mountain Ranges", can be found here: 1, 2, 3, 4.

FlyClient uses a "Difficulty MMR", which captures difficulty information at each MMR node and later uses it for difficulty-based sampling (so samples are spread according to accumulated difficulty rather than number of blocks). The data in this cell occupies at most 3,103 bytes.
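
To make the "Difficulty MMR" idea concrete, here is a minimal append-only sketch in which every node carries the sum of the difficulties below it. The leaf encoding, the 16-byte difficulty serialization (chosen to match the `uint128` field listed below) and the use of sha256 are all assumptions, not the on-chain format.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    digest: bytes
    difficulty: int  # accumulated difficulty of every block under this node


def merge(left: Node, right: Node) -> Node:
    # The parent commits to both children *and* their difficulties, so a
    # Merkle path also proves how much difficulty lies on each side.
    data = (left.digest + left.difficulty.to_bytes(16, "little")
            + right.digest + right.difficulty.to_bytes(16, "little"))
    return Node(hashlib.sha256(data).digest(), left.difficulty + right.difficulty)


class DifficultyMMR:
    def __init__(self) -> None:
        self.peaks: list[tuple[int, Node]] = []  # (height, node), left to right
        self.size = 0  # number of leaves (blocks) appended so far

    def append(self, block_hash: bytes, block_difficulty: int) -> None:
        # Hypothetical leaf encoding: the block hash plus its own difficulty.
        self.peaks.append((0, Node(block_hash, block_difficulty)))
        self.size += 1
        # Two peaks of equal height merge into one peak of height + 1.
        while len(self.peaks) >= 2 and self.peaks[-1][0] == self.peaks[-2][0]:
            height, right = self.peaks.pop()
            _, left = self.peaks.pop()
            self.peaks.append((height + 1, merge(left, right)))

    def total_difficulty(self) -> int:
        return sum(node.difficulty for _, node in self.peaks)
```

Appending seven blocks leaves peaks of heights 2, 1 and 0 (one per set bit of 7); appending an eighth merges them into a single peak. The `peaks` list plays the role of the cell's `MMR_PEAK` entries.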

The cell contains the following fields:

- `byte32 MMR_ROOT`: the current MMR root

- `struct MMR_PEAK`, with fields:
  - `byte32 peakValue`: the root of the sub-tree
  - `uint128 accumulatedDifficulty`: the accumulated difficulty of the sub-tree below the peak

- `uint8 HIGHEST_PEAK`: the highest peak of the mountain range

- `uint64 PREVIOUS_BLOCK`: the previous block processed by the cell

A type script should enforce the following constraints:

- The cell is used as input 1

- The transaction contains either 1 input cell and 1 output cell (no fee) or 2 input cells and 2 output cells (fee paid)

- The `header_deps` referenced begin at block (PREVIOUS_BLOCK + 1)

- Input 1's lock script is equal to the lock script of output 1

- The capacity of input 1 is equal to the capacity of output 1

The type script of this cell will allow any user to use header_deps to read the block(s) following PREVIOUS_BLOCK, add them to the MMR and store the accumulated difficulty value.

The type script could require a delay of a certain number of blocks since the last commitment to reduce the frequency of commitment transactions.
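
The constraints above can be sketched as a single predicate over a hypothetical, simplified transaction model. Several details are assumptions rather than part of the proposal: `header_deps` are modelled as block numbers (real ones are header hashes), they must be contiguous, the output's `PREVIOUS_BLOCK` must advance to the last block read, and the optional delay is expressed through a hypothetical `min_new_blocks` parameter. Verifying the MMR update itself is omitted.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Cell:
    # Hypothetical, simplified cell model; not CKB's real data structures.
    capacity: int
    lock: bytes
    previous_block: int = 0  # PREVIOUS_BLOCK from the info cell's data


@dataclass
class Tx:
    inputs: list[Cell]
    outputs: list[Cell]
    header_deps: list[int] = field(default_factory=list)  # block numbers, for simplicity


def check_info_cell_tx(tx: Tx, min_new_blocks: int = 1) -> bool:
    # Shape: 1-in/1-out (no fee) or 2-in/2-out (fee paid from the extra cell).
    if (len(tx.inputs), len(tx.outputs)) not in ((1, 1), (2, 2)):
        return False
    cell_in, cell_out = tx.inputs[0], tx.outputs[0]  # info cell is input 1 / output 1
    # header_deps must start at PREVIOUS_BLOCK + 1 (contiguity is an assumption).
    start = cell_in.previous_block + 1
    if not tx.header_deps or tx.header_deps != list(range(start, start + len(tx.header_deps))):
        return False
    # Optional delay (assumption): commit at least min_new_blocks blocks at once.
    if len(tx.header_deps) < min_new_blocks:
        return False
    # Assumption: the output's PREVIOUS_BLOCK advances to the last block read.
    if cell_out.previous_block != tx.header_deps[-1]:
        return False
    # Lock script and capacity of the info cell must be preserved.
    return cell_in.lock == cell_out.lock and cell_in.capacity == cell_out.capacity
```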

MMR_ROOT is a linked list of all current peaks, as recently recommended by Peter Todd (the inventor of the MMR).
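
One possible reading of "a linked list of all current peaks" is a right-to-left hash chain in which each link commits to one peak and to the rest of the list. The sketch below assumes that reading, with each peak encoded as its `peakValue` followed by a 16-byte `accumulatedDifficulty`, and sha256 standing in for the real hash.

```python
from __future__ import annotations

import hashlib


def bag_peaks(peaks: list[tuple[bytes, int]]) -> bytes:
    """Fold peaks right to left into one commitment:
    acc = H(peak_n), then acc = H(peak_i || acc) for i = n-1 down to 1.
    Each peak is (peakValue, accumulatedDifficulty)."""
    if not peaks:
        raise ValueError("an empty MMR has no root")
    acc = b""
    for value, difficulty in reversed(peaks):
        acc = hashlib.sha256(value + difficulty.to_bytes(16, "little") + acc).digest()
    return acc
```

Because each link hashes the remainder of the list, changing, reordering or dropping any peak changes the root.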

FlyClient CKB proofs

Process for delivering FlyClient CKB proofs:

1. A client requests the latest block header from full nodes;

2. The full node delivers the latest MMR commitment transaction, along with a Merkle path proving its inclusion in a commitment root in a valid block;

3. The full node delivers all block headers (SPV-style verification) between the block referenced in (2) and the latest block header (`header_deps` can only read header information after 4 epochs of block confirmation);

4. The full node delivers Merkle paths showing inclusion of the randomly sampled blocks in the MMR root contained in (2). Randomness is derived from a hash of the latest block header (FlyClient protocol).
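
Step 4's sampling can be sketched as follows, assuming per-block difficulties are available as a flat list and that sha256 over the latest header hash plus a counter provides the randomness. For simplicity it samples uniformly over accumulated difficulty; the FlyClient paper instead uses a distribution weighted toward recent blocks, and a real client would descend the Difficulty MMR using each node's accumulated difficulty rather than a flat list.

```python
from __future__ import annotations

import bisect
import hashlib
from itertools import accumulate


def sample_blocks(tip_header_hash: bytes, block_difficulties: list[int], k: int) -> list[int]:
    """Pick k block indices, each with probability proportional to its
    difficulty, using randomness derived from the latest header hash."""
    cumulative = list(accumulate(block_difficulties))  # cumulative[i] = sum of blocks 0..i
    total = cumulative[-1]
    picks = []
    for i in range(k):
        seed = hashlib.sha256(tip_header_hash + i.to_bytes(4, "little")).digest()
        point = int.from_bytes(seed, "big") % total  # point in [0, total)
        # Block j covers [cumulative[j-1], cumulative[j]); find the block containing point.
        picks.append(bisect.bisect_right(cumulative, point))
    return picks
```

Reducing a 256-bit hash modulo the total difficulty introduces a negligible bias, which is acceptable for a sketch. Since the seed comes from the tip header, a full node cannot choose which blocks get sampled without re-mining that header.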

This technique produces proofs of about 2 megabytes; more information about proof size and additional details can be found here.

Censorship attacks

This solution is unfortunately prone to miner censorship of transactions. If miners are censoring a commitment transaction, the light client will fall back to SPV verification of all blocks since the last commitment. Commitments will resume once the censorship ends.

If CKB is prone to censorship attacks, we are in an unfortunate situation overall.

Considerations for more robust implementation

In the interest of improving this, we can examine consensus rule changes to ensure this data is always updated.

In Grin and Beam, miners commit to the previous MMR state instead of the previous block in the block header. CKB consensus rules could be changed to commit to the previous MMR state (instead of the previous block), or an additional field could be added to the block header for the MMR.

Putting hard fork considerations aside, the MMR value is a compressed value rather than data the node already has (blocks). My intuition is that this complicates fork resolution, and I feel fairly certain that it complicates uncle validation; I would greatly appreciate thoughts from anyone who has examined this.

From RFC0020: "Our uncle definition is different from that of Ethereum, in that we do not consider how far away the two blocks' first common ancestor is, as long as the two blocks are in the same epoch."

This creates two cases. 1) If an extra value is added to the block header, then to validate the MMR value in an uncle header, a node would need the peak values of the MMR in the uncle's previous block. 2) If the previous-block commitment is changed to an MMR commitment, the node would need the MMR values at the point where the fork occurred in order to validate the MMR value committed to in the second block of the fork.

A second path to improved robustness is a soft-fork consensus rule that adopts the transaction-based solution, as described in Section 8.3 of the FlyClient paper. The rule would require that the second transaction of a valid block use the on-chain contract to add the previous block (which is referenced in the block header) to the MMR.

I'm looking forward to your observations and optimizations. This also needs a cool name: I am inspired by CKB's unique ability to "see itself", so I'm thinking about things around eyes and introspection!

*Many thanks to jjy, Cipher, Jan and Tannr for entertaining my thoughts at various times around the idea of using CKB to capture data for light clients.

Sounds like a perfect grants project.
