(Updated September 2020)

Title: Automated Stratum V2 mining pool for Nervos

Motivation:

The Stratum protocol V2 offers significant improvements to infrastructure efficiency, pool security, and miner privacy. We will implement this latest mining tech into an open-source pool backend system to attract more miners to secure Nervos, and encourage ASIC firmware developers to support V2. Furthermore, we will develop infrastructure-as-code automated deployments to lower technical barriers and facilitate the entry of new pools into the ecosystem.

The Stratum V2 protocol adds several new features and optimizations. Efficiency is increased through judicious selection of what data is broadcast, when it is sent, and how it is encoded. For example, job assignment begins as soon as a channel is opened, which eliminates V1's separate miner subscription step. A job can also be sent separately from the hash of the previous block. This lets miners plan ahead and start mining on a cached block template as soon as the previous block hash is received. Further efficiency comes from using a binary encoding rather than JSON, which significantly reduces message size.
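The job/previous-hash split can be sketched as a small cache on the miner side. This is a hypothetical illustration, not BTCAgent or Braiins code. The class and method names (`FutureJob`, `JobCache`, `on_new_mining_job`, `on_set_new_prev_hash`) are assumptions that only loosely mirror the spec's `NewMiningJob` and `SetNewPrevHash` messages, and the job template is modelled as opaque bytes.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class FutureJob:
    """Job template received before its previous-block hash (hypothetical model)."""
    job_id: int
    template: bytes  # opaque job data; real jobs carry protocol-specific fields


@dataclass
class ActiveJob:
    """Job ready to hash: cached template plus the previous-block hash."""
    job_id: int
    template: bytes
    prev_hash: bytes


class JobCache:
    """Caches future jobs and activates one when its previous-block hash arrives."""

    def __init__(self) -> None:
        self._future: Dict[int, FutureJob] = {}
        self.active: Optional[ActiveJob] = None

    def on_new_mining_job(self, job: FutureJob) -> None:
        # The template arrives early and is stored; no hashing starts yet.
        self._future[job.job_id] = job

    def on_set_new_prev_hash(self, job_id: int, prev_hash: bytes) -> ActiveJob:
        # Only the previous-block hash was missing, so activation is a local
        # lookup instead of a fresh round trip to the pool.
        job = self._future.pop(job_id)
        # Simplifying assumption of this sketch: other cached templates are stale.
        self._future.clear()
        self.active = ActiveJob(job.job_id, job.template, prev_hash)
        return self.active


cache = JobCache()
cache.on_new_mining_job(FutureJob(job_id=7, template=bytes(32)))
active = cache.on_set_new_prev_hash(7, bytes.fromhex("ab" * 32))
print(active.job_id)  # prints 7
```

The point of the design is that the expensive part (building and transmitting the template) happens before the new block is found, so the latency-critical message is just a short hash plus a job id.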

This efficiency is complemented by flexibility. The specification includes protocols that let miners choose their own work (transaction set). Alternatively, mining devices can operate in a simpler and more efficient mode via header-only mining. This flexibility, together with extensive support for proxies and highly efficient communication channels, lets backend infrastructure make the most productive use of its resources while still accommodating a range of miners, firmware, and hardware targets. In keeping with the protocol's design goals, the result balances efficiency against decentralization.
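Header-only mining works because a device can find enough work by varying only header fields, without modifying the coinbase transaction. A rough back-of-the-envelope check follows, assuming a Bitcoin-style header with a 32-bit nonce plus 16 version-rolling bits, and assuming a 128-bit nonce in the ckb header; the 100 TH/s hashrate is hypothetical. If the time to exhaust the space is at least one second, the device can keep going simply by advancing ntime once per second.

```python
def seconds_to_exhaust(search_bits: int, hashrate_hs: float) -> float:
    """Time for a device hashing at `hashrate_hs` H/s to try all 2**search_bits values."""
    return 2 ** search_bits / hashrate_hs


# Bitcoin-style header: 32-bit nonce plus 16 version-rolling bits (BIP 320),
# i.e. the header-only search space per ntime value.
BTC_HEADER_ONLY_BITS = 32 + 16
# Assumed width of the ckb header nonce.
CKB_NONCE_BITS = 128

HASHRATE = 100e12  # hypothetical 100 TH/s device

btc_seconds = seconds_to_exhaust(BTC_HEADER_ONLY_BITS, HASHRATE)
ckb_seconds = seconds_to_exhaust(CKB_NONCE_BITS, HASHRATE)
print(f"Bitcoin-style header: {btc_seconds:.2f} s per ntime value")  # 2.81 s
print(f"ckb header: {ckb_seconds:.1e} s per template")
```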

Miners’ security and privacy are enhanced by AEAD (authenticated encryption with associated data), which hinders network-level surveillance and prevents hashrate hijacking. Miners can also influence which transactions are included in a block, which benefits network decentralization and censorship resistance.

We will implement a compliant base of Stratum V2 requirements and features in the open-source BTCPool backend system, which supports the Nervos Eaglesong hash function and the ckb blockchain, and we will contribute our work to the upstream repositories. Deployments will be automated with a combination of Terraform, Terragrunt, and Ansible across VMs, Kubernetes, and managed services, so that users can quickly adopt the necessary infrastructure components and run the stack independently.

Project Milestones and Timeline

The Stratum V2 protocol is designed so that the transition from Stratum V1 (and ad-hoc V1 variants) to Stratum V2 can be made in stages of increasing complexity and potential efficiency gain. Following the Stratum V2 developers’ advice, we will start simple and implement in stages. The project will proceed through the following milestones, which leave room to explore more sophisticated, higher-performance solutions while always building on a compliant, tested, and performant base:

Milestone 1 of 3:

Develop infrastructure provisioning and automated deployments utilizing the ckb blockchain within the BTCPool infrastructure (Estimated time: 4 weeks)

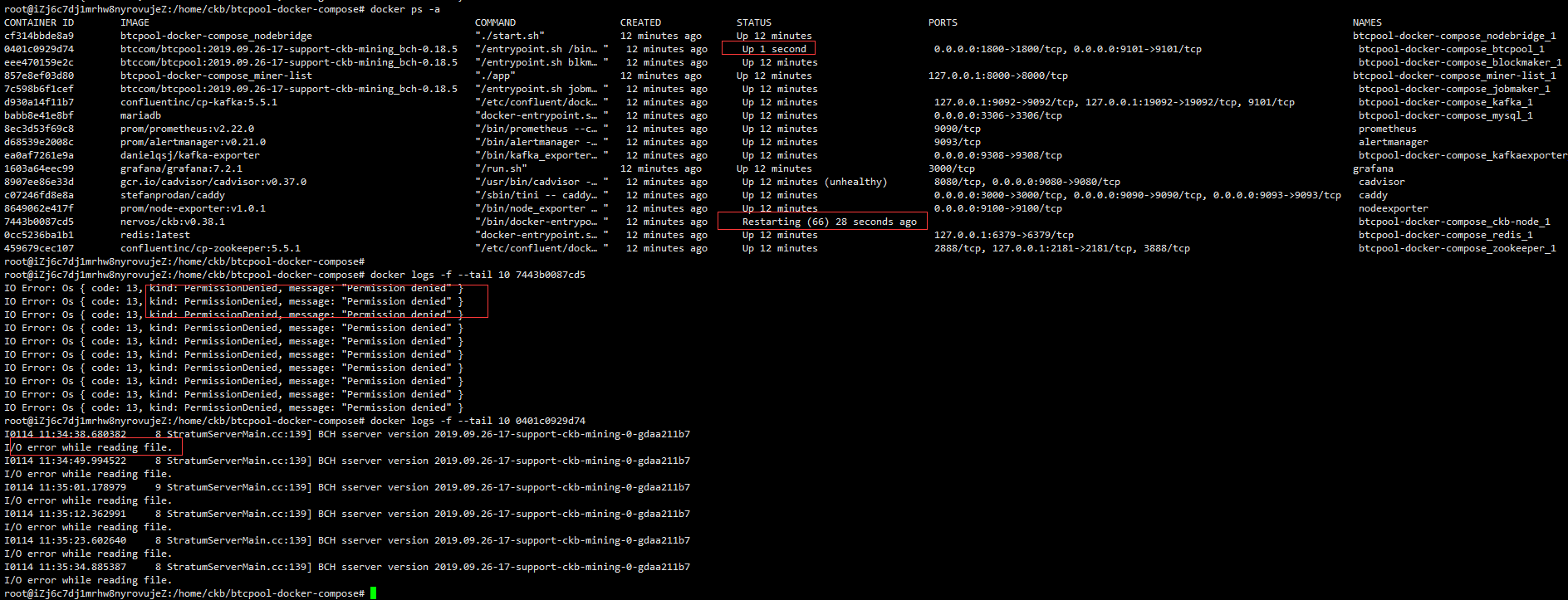

To empower development and facilitate adoption of the stack, we will initially focus the grant on building an automated deployment of the necessary infrastructure components. Development will focus on a VM-based approach, configured with Ansible, that deploys all dependencies on a single host in both cloud and on-prem environments. Packaging will initially use docker-compose to facilitate development, though we will expose the interfaces needed to decouple parts of the stack (e.g., Kafka and Redis) into a multi-host environment.
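One way to keep the single-host docker-compose layout decouplable is to give every shared service a default endpoint that can be overridden from the environment. The sketch below assumes hypothetical docker-compose service names, ports, and `<NAME>_ENDPOINT` variables; it is not taken from BTCPool's configuration.

```python
import os
from typing import Dict, Mapping, Optional

# Defaults point at docker-compose service names on a single host.
# Service names, ports, and variable names are illustrative assumptions.
DEFAULT_ENDPOINTS: Dict[str, str] = {
    "kafka": "kafka:9092",
    "redis": "redis:6379",
}


def resolve_endpoints(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return service endpoints, letting <NAME>_ENDPOINT variables override defaults.

    Pointing KAFKA_ENDPOINT at an external broker, for example, moves Kafka off
    the single host without changing the rest of the stack.
    """
    env = os.environ if env is None else env
    return {
        name: env.get(f"{name.upper()}_ENDPOINT", default)
        for name, default in DEFAULT_ENDPOINTS.items()
    }


print(resolve_endpoints({"KAFKA_ENDPOINT": "10.0.1.5:9092"}))
```

Because every component reads its peers through the same resolver, splitting the stack across hosts becomes a configuration change rather than a code change.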

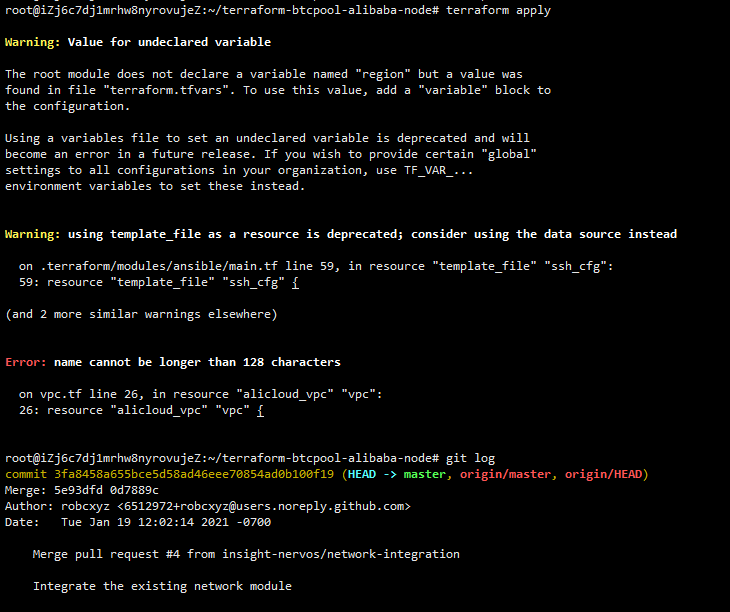

An Ansible role will be developed and published to the Ansible Galaxy registry so that users can easily run it for both on-prem and cloud deployments. A continuous integration pipeline built with CircleCI will test the deployment steps automatically on each commit. Terraform modules will then be built to support one-click deployment on AWS, with the option to extend to other clouds at the Foundation's request. Configuration will be handled through a custom CLI that sets the options exposed by the infrastructure-as-code modules. A suite of Prometheus exporters will be integrated into the stack, along with an automated Prometheus deployment to monitor it.

With the infrastructure fully automated, any change to our design becomes available immediately by redeploying the stack, establishing an immutable deployment pattern for all subsequent operations.

The high-level deliverables for this milestone are listed below.

Deliverables:

- Deployment of all components in containers within a single docker-compose file for quick, iterative testing of the stack

- Ansible roles to configure hosts with relevant tooling for both on-premise and cloud

- Terraform modules to deploy the stack in a single-host cloud environment on AWS and Alibaba (more clouds on request), with interfaces exposed for decoupling parts of the stack

- CLI tooling to configure and deploy the stack with a single command
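A configuration CLI of this kind could translate a few command-line options into a Terraform variables file. The sketch below is hypothetical: the option names and Terraform variable names (`cloud`, `region`, `instance_type`, `single_host`, `kafka_endpoint`) are assumptions that would have to match the variables the modules actually declare. It relies only on Terraform automatically loading a `terraform.tfvars.json` file from the working directory.

```python
import argparse
import json
from typing import Dict, List, Optional, Union


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Generate Terraform variables for the pool stack (sketch).")
    parser.add_argument("--cloud", choices=["aws", "alibaba"], default="aws")
    parser.add_argument("--region", default="us-east-1")
    parser.add_argument("--instance-type", default="m5.xlarge")
    parser.add_argument("--external-kafka", default=None, metavar="HOST:PORT",
                        help="use an external Kafka broker instead of the bundled container")
    return parser


def to_tfvars(args: argparse.Namespace) -> Dict[str, Union[str, bool]]:
    """Map CLI options onto (hypothetical) Terraform variable names."""
    tfvars: Dict[str, Union[str, bool]] = {
        "cloud": args.cloud,
        "region": args.region,
        "instance_type": args.instance_type,
        # Single-host layout unless part of the stack is moved elsewhere.
        "single_host": args.external_kafka is None,
    }
    if args.external_kafka is not None:
        tfvars["kafka_endpoint"] = args.external_kafka
    return tfvars


def main(argv: Optional[List[str]] = None) -> None:
    args = build_parser().parse_args(argv)
    # Redirect stdout to terraform.tfvars.json, which Terraform loads automatically.
    print(json.dumps(to_tfvars(args), indent=2))


if __name__ == "__main__":
    main()
```

Usage, assuming the script is saved as a hypothetical `configure.py`: `python configure.py --cloud aws --region us-west-2 > terraform.tfvars.json`, followed by the usual `terraform apply`.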

Milestone 2 of 3:

Implement a V1 <-> V2 proxy compatible with ckb-miner and with BTCPool’s BTCAgent protocol agent. (Estimated time: 4-6 weeks)

With an automated deployment running, including observability into key performance metrics, we have set the stage for a robust, staged implementation of the Stratum V2 protocol.

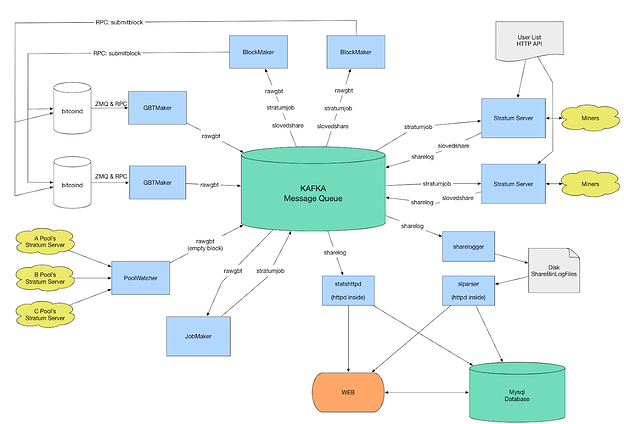

Note that Stratum is designed with three layers (components): transport, application protocol, and services. The focus of this effort is the application protocol. The BTCPool codebase reflects this layering: the pool codebase (BTCPool) provides the bulk of the transport and services components, while BTCAgent provides the protocol component and serves as a proxy/agent through which miners communicate with the pool at high efficiency. Our engineering focus will therefore be on BTCAgent, while taking care to maintain performance and compatibility with the BTCPool infrastructure.

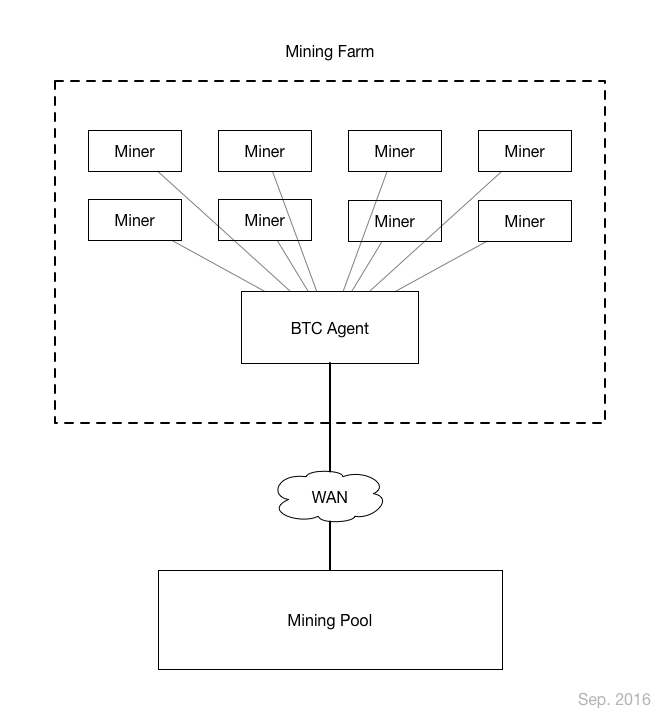

Here’s a high-level diagram of the architecture, courtesy of the BTCAgent GitHub:

In this milestone, the first stage of the protocol work, we implement a V1 → V2 proxy that is compatible with BTCAgent. The Braiins/Stratum team provides roadmaps and templates for this process: full reference implementations of V1 and V2, a testing and simulation tool for rapid prototyping and development, and a model proxy implementation to guide the implementation within BTCAgent.

With these reference templates, and with the existing stratum server subclasses for Bitcoin and Ethereum already running in BTCAgent, we will deliver the following.

Deliverables:

- Implement a ckb subclass for BTCPool stratum servers, similar to existing bitcoin and ethereum implementations

- Implement the data structures (especially message types) and network/messaging flows required by the V2 application protocol (see specification, especially Mining Protocol section)

- Implement, test, and optimize a ckb V1 -> V2 proxy, in anticipation of the next milestone (testable in the meantime with simulation tools provided by Braiins and BTCPool)

- Further develop and iterate on the design for implementing V2 in the pool infrastructure
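The core of a V1 -> V2 proxy is translating each V1 share submission into a V2 message on an upstream channel. The sketch below is hypothetical and simplified. It assumes Bitcoin-style V1 `mining.submit` params `[worker, job_id, extranonce2, ntime, nonce]` as hex strings (ckb-miner's V1 dialect may differ), and it models the output on the field list of the spec's `SubmitSharesExtended` message. Class and method names are illustrative only.

```python
from dataclasses import dataclass
from itertools import count
from typing import Dict, List, Tuple


@dataclass
class SubmitSharesExtended:
    """Fields modelled on the spec's SubmitSharesExtended message."""
    channel_id: int
    sequence_number: int
    job_id: int
    nonce: int
    ntime: int
    version: int
    extranonce: bytes


class V1ToV2Translator:
    """Hypothetical share-translation step of a V1 -> V2 proxy."""

    def __init__(self, channel_id: int) -> None:
        self.channel_id = channel_id
        # V1 job ids are strings; V2 job ids are u32. Remember (v2_job_id, version).
        self._jobs: Dict[str, Tuple[int, int]] = {}
        self._seq = count()

    def register_job(self, v1_job_id: str, v2_job_id: int, version: int) -> None:
        # Called when an upstream V2 job is forwarded downstream as a V1 notify.
        self._jobs[v1_job_id] = (v2_job_id, version)

    def translate_submit(self, params: List[str]) -> SubmitSharesExtended:
        # Assumed Bitcoin-style V1 params: [worker, job_id, extranonce2, ntime, nonce].
        _worker, v1_job_id, extranonce2, ntime_hex, nonce_hex = params
        v2_job_id, version = self._jobs[v1_job_id]
        return SubmitSharesExtended(
            channel_id=self.channel_id,
            sequence_number=next(self._seq),
            job_id=v2_job_id,
            nonce=int(nonce_hex, 16),
            ntime=int(ntime_hex, 16),
            version=version,
            # Simplification: forwards extranonce2 as-is; a real proxy must also
            # account for any extranonce1 bytes it assigned downstream.
            extranonce=bytes.fromhex(extranonce2),
        )
```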

Milestone 3 of 3:

Implement the Stratum V2 Mining Protocol (the minimal and only required component of Stratum V2) in BTCAgent, contributing connecting code to BTCPool as needed. (Estimated time: 4-6 weeks)

An automated, observable deployment with a functioning V1 -> V2 proxy prepares us for the final goal: implementing the V2 protocol within the pool infrastructure.

The pool’s design allows us to keep our efforts focused within BTCAgent while carefully propagating changes out to BTCPool where needed. In the previous milestone, we implemented the fundamental data structures and the ability to generate streams of V2 messages via our translating proxy, by subclassing the stratum servers. In this milestone, we push the implementation deeper into the fundamental classes (namely, btcpool/src/Stratum*.cc/h and btcpool/src/sserver).

Within this code, we will implement the Stratum V2 binary message format and all required Mining Protocol methods.
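The binary message format starts with a small frame header. The sketch below assumes the header layout described in the Stratum V2 specification (little-endian `extension_type` U16 whose top bit flags channel messages, `msg_type` U8, and `msg_length` U24) and the six U32 fields of `SubmitSharesStandard`. The message-type code `0x1A` is an assumption to be checked against the spec's message-type table, and the handler registry is a hypothetical illustration of per-method dispatch, not BTCPool's design.

```python
import struct
from typing import Callable, Dict, Tuple

HEADER_LEN = 6            # extension_type (U16) + msg_type (U8) + msg_length (U24)
CHANNEL_MSG_BIT = 0x8000  # top bit of extension_type marks channel messages

# Assumed message-type code; verify against the specification.
MSG_SUBMIT_SHARES_STANDARD = 0x1A


def encode_frame(msg_type: int, payload: bytes, extension_type: int = 0,
                 channel_msg: bool = False) -> bytes:
    if len(payload) >= 1 << 24:
        raise ValueError("payload too large for a U24 length")
    ext = extension_type | (CHANNEL_MSG_BIT if channel_msg else 0)
    return struct.pack("<HB", ext, msg_type) + len(payload).to_bytes(3, "little") + payload


def decode_frame(frame: bytes) -> Tuple[int, int, bool, bytes]:
    """Return (extension_type, msg_type, channel_msg, payload)."""
    ext, msg_type = struct.unpack_from("<HB", frame)
    length = int.from_bytes(frame[3:HEADER_LEN], "little")
    payload = frame[HEADER_LEN:HEADER_LEN + length]
    if len(payload) != length:
        raise ValueError("truncated frame")
    return ext & 0x7FFF, msg_type, bool(ext & CHANNEL_MSG_BIT), payload


def handle_submit_shares_standard(payload: bytes) -> Dict[str, int]:
    fields = ("channel_id", "sequence_number", "job_id", "nonce", "ntime", "version")
    return dict(zip(fields, struct.unpack("<6I", payload)))


# Hypothetical registry mapping msg_type to a handler for each Mining Protocol method.
HANDLERS: Dict[int, Callable[[bytes], object]] = {
    MSG_SUBMIT_SHARES_STANDARD: handle_submit_shares_standard,
}


def dispatch(frame: bytes) -> object:
    _ext, msg_type, _channel_msg, payload = decode_frame(frame)
    handler = HANDLERS.get(msg_type)
    if handler is None:
        raise ValueError(f"unsupported msg_type 0x{msg_type:02x}")
    return handler(payload)
```

Adding another Mining Protocol method in this layout means adding one decoder and one registry entry, which keeps the framing code untouched.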

These fundamental components will be connected, and made performant, using the gRPC framework, which maps well onto the current JSON-RPC framework while allowing binary message formats. Protobuf definitions for gRPC will be derived directly from the V2 protocol specification and uploaded to the Kafka schema registry. We anticipate up to a 10x improvement in message transfer performance over the current JSON format.
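To give a feel for why binary formats shrink messages, the sketch below encodes one share submission both as a V1-style JSON-RPC line and as a V2-style binary frame. The values are illustrative, the JSON shape assumes Bitcoin-style `mining.submit` params, and the binary layout assumes the spec's 6-byte frame header followed by six little-endian U32 fields, with `0x1A` as an assumed message-type code. This compares a single message only; it is not a measurement of end-to-end throughput.

```python
import json
import struct

# One share submission with illustrative values.
channel_id, sequence_number, job_id = 1, 42, 7
nonce, ntime, version = 0xDEADBEEF, 0x5F6B1C00, 0x20000000

# V1-style JSON-RPC request (Bitcoin-style params as hex strings), newline-delimited.
v1_message = json.dumps({
    "id": 42,
    "method": "mining.submit",
    "params": ["worker.1", str(job_id), "00000001", f"{ntime:08x}", f"{nonce:08x}"],
}) + "\n"

# V2-style binary frame: 6-byte header + six little-endian U32 fields.
payload = struct.pack("<6I", channel_id, sequence_number, job_id, nonce, ntime, version)
v2_frame = struct.pack("<HB", 0x8000, 0x1A) + len(payload).to_bytes(3, "little") + payload

v1_size = len(v1_message.encode())
v2_size = len(v2_frame)
print(f"JSON: {v1_size} bytes, binary: {v2_size} bytes, ratio {v1_size / v2_size:.1f}x")
```

The binary frame is a fixed 30 bytes. It also needs no text parsing, because each field sits at a known offset.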

Deliverables:

- Compliant implementation of the Stratum V2 Mining Protocol, the direct standardized successor to the ad-hoc V1 protocol, at the pool level

- gRPC-based framework for connecting components and performant message streaming

- Integration and testing of ckb-miner

With this approach, we will have strategically built up an automated, Stratum V2-compliant deployment within 3.5 months, our target timeline. This empowers a larger base of users and operators to get systems running and extend them to suit their priorities.

If few challenges arise and milestones are met sooner than expected, further stages of implementation can be explored. These include the optional protocols (Job Negotiation, Template Distribution, Job Distribution) and ckb-driven infrastructure and deployments that maximize the potential efficiency gains of Stratum V2.

Team:

Insight’s crypto R&D team is dedicated to building infrastructure and software to tackle high-impact challenges in the blockchain space. In the last 6 months, we have executed projects funded by the Zcash Foundation, Web 3 Foundation, ICON Foundation, Harmony Grants, and Monero CCS.

Insight hosted Nervos during the Rust in Blockchain Workshops (fellows.link/rust2019), and we are excited for this opportunity to contribute to the Nervos ecosystem ourselves.

Project Manager: Mitchell Krawiec-Thayer

- Head of Research, Developers in Residence at Insight

- Founding Lead of the Insight Decentralized Consensus Fellows Program

- Led projects for the Zcash Foundation, Web 3 Foundation, ICON Foundation, Harmony Grants, Quantum Resistant Ledger, and Monero CCS

- Data Science and Protocol SecEng for Monero Research Lab

- GitHub, Twitter, LinkedIn, Medium

Infrastructure Automation: Rob Cannon

- Infrastructure Architect & Blockchain DevOps at Insight

- Developed one-click deployments for Polkadot, ICON, and Harmony validators

- Expert in advanced infrastructure (reference architectures) and automated deployments of infrastructure nodes for blockchain networks

- GitHub, LinkedIn

Engineer: Pranav Thirunavukkarasu (focusing on protocol upgrades for this project)

- Blockchain Engineer, Developer in Residence at Insight

- Lead Engineer on both the Zcash Observatory initiative and the Harmony blockchain explorer upgrades.

- Built a DevOps tool to spin up various local Lightning Network clusters for development and network testing purposes: fellows.link/pranav_video

- Full-stack engineering experience at Hewlett Packard Enterprise, focused on front-end user interfaces and low-level networking

- GitHub, LinkedIn, Twitter

Engineer: Richard Mah (focusing on infrastructure upgrades for this project)

- Full-stack blockchain DevOps & data engineer

- Worked on projects funded by the Web3 Foundation and ICON Foundation

- Blockchain data ETL expert, building high-performance data pipelines for distributed systems, which is crucial for accurate, low-latency DeFi market data

- GitHub, LinkedIn